Assessing the Security Risks of an AI Solution During Procurement

Nikoloz Kokhreidze

Learn how to effectively assess the security risks of AI solutions during procurement. Our comprehensive guide covers risk identification, assessment, mitigation strategies, and best practices for secure AI adoption.

Did you know that while 64% of businesses expect AI to increase productivity, only 25% of companies have a comprehensive AI security strategy in place?

As businesses increasingly adopt artificial intelligence (AI) solutions to enhance their decision-making processes, it is essential for your security team to thoroughly analyze and identify the potential risks associated with these technologies.

When your organization is considering purchasing an AI solution, the security team plays a vital role in ensuring that the system aligns with the company's security requirements and does not introduce unacceptable risks. In this post, we will explore a comprehensive approach to assessing the security risks of an AI solution during the procurement process.

Understand the AI Solution

The first step in assessing the security risks of an AI solution is to gain a deep understanding of its purpose, functionality, and architecture. This involves gathering detailed information about the solution, including:

- Its intended use cases

- The algorithms and models employed

- The underlying infrastructure

- Data sources and types of data processed and stored

- Integration points and dependencies with existing systems
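One way to keep this information consistent across vendors is to capture it in a structured record and check which parts are still missing before the assessment continues. The sketch below is a hypothetical example: the `AISolutionProfile` class and its `missing_information` method are illustrative names, not part of any standard or vendor questionnaire.

```python
from dataclasses import dataclass, field, fields


@dataclass
class AISolutionProfile:
    """Hypothetical record of what the security team has learned about a candidate AI solution."""
    name: str
    use_cases: list[str] = field(default_factory=list)       # intended use cases
    models: list[str] = field(default_factory=list)          # algorithms and models employed
    infrastructure: list[str] = field(default_factory=list)  # hosting, regions, sub-processors
    data_types: list[str] = field(default_factory=list)      # data processed and stored
    integrations: list[str] = field(default_factory=list)    # dependencies on existing systems

    def missing_information(self) -> list[str]:
        """Return the names of profile fields the vendor has not documented yet."""
        return [f.name for f in fields(self) if f.name != "name" and not getattr(self, f.name)]


profile = AISolutionProfile(
    name="Contract summarizer",
    use_cases=["Summarize supplier contracts"],
    data_types=["contract text", "supplier contact details"],
)
print(profile.missing_information())
```

Empty fields become follow-up questions for the vendor, so gaps in your understanding are visible before you move on to identifying risks.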

Identify Potential Security Risks

Once you have a clear understanding of the AI solution, the next step is to identify the potential security risks associated with it. This process involves a comprehensive analysis of various aspects of the system, as summarized in the table below:

| Security Risk | Description |
|---|---|
| Data Privacy and Protection | Assess the sensitivity of the data processed and evaluate data handling practices to ensure compliance with regulations (e.g., GDPR, HIPAA). |
| Algorithmic Bias and Fairness | Examine the AI model for potential biases that may lead to discriminatory or unfair decisions. |
| Model Integrity and Robustness | Evaluate the AI model's resilience against adversarial attacks and manipulations, and assess its performance and accuracy in real-world scenarios. |
| Transparency and Explainability | Determine the level of transparency and interpretability of the AI model's decisions, especially in regulated industries where accountability is crucial. |
| Access Control and Authentication | Evaluate access control mechanisms and assess authentication and authorization processes to prevent unauthorized access and maintain data confidentiality. |
| Integration and Interoperability | Analyze security risks arising from integrating the AI solution with existing systems, and consider the compatibility and security of the interfaces. |
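The risk areas in this table translate naturally into a vendor questionnaire. The following minimal sketch pairs each area with one example question and lists the areas where the vendor's response is still empty. The questions and the `unanswered_areas` helper are illustrative assumptions; a real questionnaire would contain many more questions per area.

```python
# Risk areas from the table; the questions are illustrative examples only.
RISK_AREAS = {
    "Data Privacy and Protection": "What personal or regulated data does the solution process or store?",
    "Algorithmic Bias and Fairness": "How has the model been tested for biased or discriminatory outcomes?",
    "Model Integrity and Robustness": "What testing has been done against adversarial inputs?",
    "Transparency and Explainability": "Can individual decisions be explained to auditors?",
    "Access Control and Authentication": "Which authentication methods and role-based controls are supported?",
    "Integration and Interoperability": "Which interfaces (APIs, connectors) does the integration expose?",
}


def unanswered_areas(vendor_answers: dict[str, str]) -> list[str]:
    """Return the risk areas for which the vendor gave no substantive answer."""
    return [area for area in RISK_AREAS if not vendor_answers.get(area, "").strip()]


answers = {
    "Data Privacy and Protection": "Processes contract text; data stored in the EU region.",
    "Access Control and Authentication": "SAML SSO and role-based access control.",
    "Model Integrity and Robustness": "   ",  # whitespace-only answers count as unanswered
}
for area in unanswered_areas(answers):
    print(f"Follow up with vendor: {area} -> {RISK_AREAS[area]}")
```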

Conduct Risk Assessment

After identifying the potential security risks, the next step is to conduct a thorough risk assessment. Here's a step-by-step process to follow:

- Evaluate the likelihood and impact of each identified risk.

- Prioritize risks based on their severity and potential consequences to the business.

- Consider the specific context of your organization, including its risk appetite, regulatory requirements, and business objectives.

- Document the findings and recommendations from the risk assessment.

- Communicate the results to relevant stakeholders.
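A common way to evaluate likelihood and impact is a simple risk matrix. The sketch below assumes a 1-to-5 scale for both, scores each risk as likelihood × impact, and compares the score with a single numeric risk-appetite threshold. The scales, threshold, and example risks are assumptions for illustration; many organizations use qualitative ratings or different scoring schemes instead.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and impact must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(risks: list[Risk], appetite: int) -> list[tuple[str, int, str]]:
    """Rank risks by score (highest first) and flag those above the risk appetite for treatment."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.name, r.score, "treat" if r.score > appetite else "accept") for r in ranked]


register = [
    Risk("Training data contains customer PII", likelihood=4, impact=5),
    Risk("Prompt injection via uploaded documents", likelihood=3, impact=4),
    Risk("Vendor API outage", likelihood=2, impact=3),
]
for name, score, decision in prioritize(register, appetite=9):
    print(f"{score:>2}  {decision:<6}  {name}")
```

The ranked output is a convenient starting point for the documented findings you share with stakeholders.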

Develop Mitigation Strategies

Based on the risk assessment results, your security team should collaborate with relevant stakeholders to develop mitigation strategies. This involves proposing security controls and measures that reduce the likelihood or impact of each risk.

Examples of mitigation strategies that you should consider:

- Implementing data encryption and access controls

- Establishing monitoring and auditing mechanisms

- Developing policies, training programs, and incident response plans

Create an action plan to prioritize and implement the recommended security measures.
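An action plan can be as simple as a list of risks, each with its proposed controls and an accountable owner, ordered by risk score. This hypothetical sketch (the `Action` class and `build_action_plan` function are illustrative, not a standard format) sorts the plan and reports any risk that still lacks a control or an owner.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    risk: str
    risk_score: int                                    # from the risk assessment, e.g. likelihood x impact
    controls: list[str] = field(default_factory=list)  # proposed security controls
    owner: str = ""                                    # accountable stakeholder, e.g. vendor, IT, legal


def build_action_plan(actions: list[Action]) -> tuple[list[Action], list[str]]:
    """Order actions by risk score and list risks that still lack a control or an owner."""
    plan = sorted(actions, key=lambda a: a.risk_score, reverse=True)
    gaps = [a.risk for a in plan if not a.controls or not a.owner]
    return plan, gaps


actions = [
    Action("Vendor API outage", 6, ["Documented fallback to a manual process"], "IT operations"),
    Action("Training data contains customer PII", 20,
           ["Encrypt data at rest", "Contractual ban on training with customer data"], "Legal"),
    Action("Prompt injection via uploaded documents", 12, ["Input filtering"]),  # no owner yet
]
plan, gaps = build_action_plan(actions)
for action in plan:
    print(action.risk_score, action.risk, action.controls, action.owner or "UNASSIGNED")
print("Gaps:", gaps)
```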


Engage with Stakeholders

Effective risk mitigation requires collaboration and communication with various stakeholders. The security team should:

- Engage with the AI solution provider to discuss the identified security concerns and work together to implement necessary controls and safeguards

- Involve internal stakeholders, such as IT, legal, and compliance teams, to ensure alignment with business requirements and regulations

- Present the security risks and mitigation strategies to executives and technical teams in a clear and compelling manner

Clear communication and collaboration among all parties largely determine whether your risk management succeeds.

Continuous Monitoring and Review

Assessing the security risks of an AI solution is not a one-time exercise. As the AI system evolves (integrating with new products and processes) and the threat landscape changes, it is crucial to establish a process for ongoing monitoring and assessment of the solution's security posture.

- Conduct regular reviews and updates of the risk assessment based on changes in the AI solution, emerging threats, and shifts in business requirements

- Establish metrics and key performance indicators (KPIs) to measure the effectiveness of the security controls and track progress over time

- Perform periodic security audits and penetration testing to identify and address any new vulnerabilities or weaknesses
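The review triggers and KPIs above can be partly automated. The sketch below assumes a fixed 180-day review cadence (a hypothetical value) plus event-driven triggers, and uses the share of passing control checks as one example KPI. The function names and the cadence are illustrative assumptions rather than a prescribed method.

```python
from datetime import date, timedelta

# Assumed review cadence; choose one that matches your risk appetite.
REVIEW_INTERVAL = timedelta(days=180)


def reassessment_reasons(last_review: date, today: date, change_events: list[str]) -> list[str]:
    """List the reasons, if any, why the AI solution's risk assessment should be revisited."""
    reasons = []
    if today - last_review >= REVIEW_INTERVAL:
        reasons.append(f"scheduled review overdue (last review {last_review.isoformat()})")
    # Changes to the solution, emerging threats, or new business requirements also trigger a review.
    reasons.extend(f"change event: {event}" for event in change_events)
    return reasons


def control_pass_rate(results: dict[str, bool]) -> float:
    """Example KPI: share of monitored security controls that passed their latest check."""
    return sum(results.values()) / len(results) if results else 0.0


reasons = reassessment_reasons(
    last_review=date(2024, 1, 15),
    today=date(2024, 8, 1),
    change_events=["vendor released a new model version"],
)
print(reasons)
print(control_pass_rate({"encryption": True, "mfa": True, "audit logging": False, "pentest findings closed": True}))
```

Tracking the pass rate over successive reviews shows whether the controls are holding up as the solution and threat landscape change.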

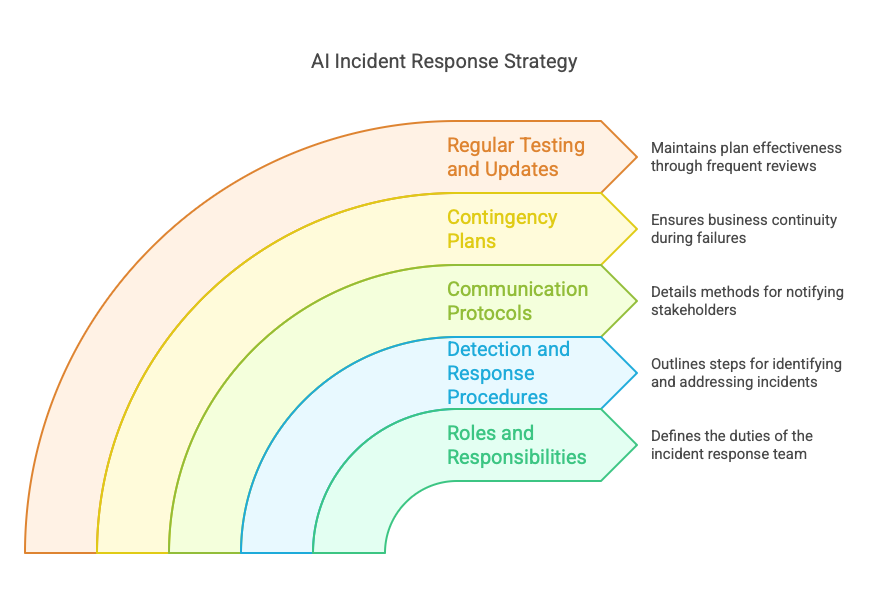

Incident Response and Contingency Planning

Despite the best efforts to mitigate risks, security incidents can still occur. Therefore, it is essential to develop an incident response plan specific to the AI solution.

The plan should define:

- Roles and responsibilities of the incident response team

- Procedures for detecting, investigating, and responding to security incidents

- Communication protocols for notifying relevant stakeholders

Additionally, establish contingency plans to ensure business continuity in case of AI solution failures or breaches. Regularly test and update the incident response and contingency plans to maintain their effectiveness.
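The communication protocols in the plan can be written down as an explicit matrix so that responders do not have to decide under pressure who to notify. In this minimal sketch, the severity levels, recipients, and deadlines are hypothetical placeholders, as is the rule that any incident involving personal data is routed to legal for a regulatory-notification assessment.

```python
from dataclasses import dataclass

# Hypothetical communication protocol: who is notified, and within how many hours,
# for each incident severity. Replace with the values from your own incident response plan.
NOTIFICATION_MATRIX: dict[str, tuple[list[str], int]] = {
    "low": (["security team"], 72),
    "medium": (["security team", "AI solution owner"], 24),
    "high": (["security team", "AI solution owner", "legal", "executives"], 4),
}


@dataclass
class AIIncident:
    summary: str
    severity: str                       # "low", "medium" or "high"
    personal_data_involved: bool = False


def notification_plan(incident: AIIncident) -> tuple[list[str], int]:
    """Return who must be notified and the notification deadline in hours."""
    if incident.severity not in NOTIFICATION_MATRIX:
        raise ValueError(f"unknown severity: {incident.severity!r}")
    recipients, deadline_hours = NOTIFICATION_MATRIX[incident.severity]
    recipients = list(recipients)  # copy so the shared matrix is not modified
    if incident.personal_data_involved and "legal" not in recipients:
        # Legal/compliance assesses regulatory notification duties (e.g., under GDPR).
        recipients.append("legal")
    return recipients, deadline_hours


incident = AIIncident("Model output exposed another customer's records", "medium", personal_data_involved=True)
print(notification_plan(incident))
```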


Best Practices and Lessons Learned

Here are some key takeaways and practical advice for organizations looking to securely adopt AI solutions:

- Start with a clear understanding of the AI solution's purpose, functionality, and architecture

- Identify and prioritize potential security risks based on their likelihood and impact

- Develop and implement appropriate mitigation strategies and security controls

- Foster collaboration and communication among stakeholders, including the AI solution provider and internal teams

- Establish a process for continuous monitoring, review, and improvement of the AI solution's security posture

- Develop and regularly test incident response and contingency plans

By following these best practices, you can proactively address security risks and use AI while maintaining the confidentiality, integrity, availability, and non-repudiation of your systems and data.

Conclusion

Assessing the security risks of an AI solution during the procurement process is a critical responsibility of the security team. By following a comprehensive approach that includes understanding the solution, identifying potential risks, conducting risk assessments, developing mitigation strategies, engaging with stakeholders, continuous monitoring, and incident response planning, organizations can make informed decisions and ensure the secure adoption of AI technologies.

As always, security is an ongoing process, and regular reviews and updates are necessary to keep pace with the evolving threat landscape and changing business requirements.