How MCP Creates AI Superpowers That Bypass Traditional Security Models

Nikoloz Kokhreidze

The Model Context Protocol lets AI access multiple systems simultaneously, creating security risks most organizations aren't prepared for. Learn the strategic framework needed for proper MCP governance.

Every security leader knows the basics: isolate critical systems, check every access request, watch all traffic. Then along comes a protocol that rewrites these rules completely.

The Model Context Protocol (MCP) isn't just another way to connect systems. It changes the security picture by giving AI access privileges on a scale we haven't seen before. While your security team works on stronger walls, MCP builds bridges between areas that were once kept separate.

Over the past months I've built and used various MCP servers, tracking how this protocol changes the security landscape. What I found will change how you think about AI management:

MCP creates what I call "identity confusion": it becomes hard to tell whether an action came from an AI or a human, and current security controls are not built to make that distinction.

This matters a lot for your organization. By the time you finish reading, you'll understand:

- Why traditional access management breaks when AI systems use MCP

- How security boundaries disappear when AI connects across system permissions

- What governance structures need to change to handle this shift

MCP lets AI systems access multiple data sources at once, using permissions in ways your security team never planned for. This creates risks most organizations aren't prepared to handle.

The Universal Remote for AI

Before we dive into security issues, let's clarify what the Model Context Protocol actually does.

The Model Context Protocol (MCP) offers a standard way for AI models to connect with outside data sources and tools. Think of it as a universal remote that lets AI assistants work with various systems without special coding for each connection.

Introduced by Anthropic in late 2024 and quickly adopted by OpenAI and others, MCP solves a real engineering challenge: connecting many AI applications to many tools. Without a shared protocol, M applications and N tools need up to M×N custom integrations. With MCP, each application implements the client side once and each tool implements the server side once, so the effort grows as M + N instead.
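To see what a single consistent protocol looks like on the wire: MCP messages use JSON-RPC 2.0, and a client invokes a server's tool with a `tools/call` request carrying the tool `name` and its `arguments`. The sketch below only builds such a message with Python's standard library. The tool name `query_crm` and its arguments are hypothetical, and a real client would use an MCP SDK and a transport rather than printing JSON.

```python
import json

def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 request in the shape MCP uses for tool invocation."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)

# Hypothetical tool and arguments; any MCP server is called with the same envelope.
wire = make_tool_call(1, "query_crm", {"account": "ACME", "fields": ["owner", "stage"]})
print(wire)
```

The same envelope works for a code-hosting server, a payments server, or a database server; only `name` and `arguments` change. That uniformity is the engineering win, and it is also why one AI client can reach many systems with so little friction.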

This clever solution has led to rapid adoption. The ecosystem now includes thousands of community-driven servers connecting AI to everything from GitHub and Slack to payment systems and databases.

But this convenience comes with security costs most organizations aren't ready for.

How MCP Transforms AI Capabilities

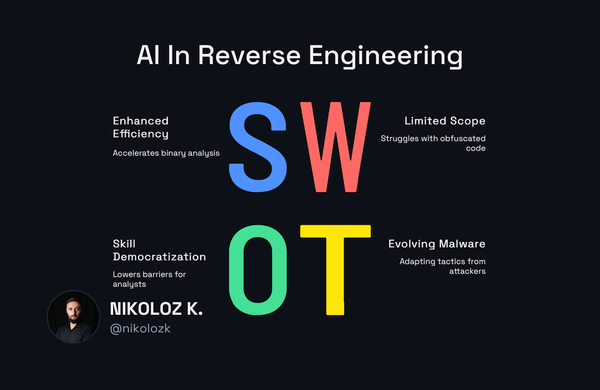

MCP gives AI three basic superpowers that traditional security wasn't designed to handle:

- Identity Amplification: AI can act through multiple identity contexts at once

- Context Consolidation: AI can access information across security boundaries

- Permission Persistence: AI actions keep privileges across system transitions

These abilities solve real business problems. Rather than building many separate connections for each service, MCP standardizes how your components share data and actions with any AI-based workflow.

But this business value comes with security impacts that go beyond traditional models.

Identity and Access Management Disruption

Traditional access management assumes a direct connection between users and permissions. MCP breaks this model by creating a new type of digital entity that doesn't fit existing frameworks.

The Consolidated Super-User Problem

MCP creates a "super-user" when an AI assistant connects to multiple systems. Unlike humans who work in one context at a time, AI systems can request data from a CRM, change records in HR, and trigger financial transactions all at once.

This creates a whole new security risk. An AI agent can gather privileges across different systems, bypassing the careful role structures you created for enterprise systems.

Consider a real scenario where an AI assistant helping with HR onboarding can access:

- Candidate information in the hiring system

- Salary data in the HR system

- Team budgets in the financial system

- Code repositories for project assignments

None of your human HR staff would have all this access. Yet through MCP, the AI can span these systems with a single query.
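The gap can be made visible with simple set arithmetic. The sketch below, using entirely hypothetical role and scope names, compares the union of scopes an assistant holds through its MCP connections against each human role involved in onboarding.

```python
# Hypothetical permission scopes held by human roles involved in onboarding.
HUMAN_ROLES = {
    "recruiter": {"hiring:read_candidates"},
    "hr_generalist": {"hr:read_salary"},
    "finance_analyst": {"finance:read_budgets"},
    "eng_manager": {"code:read_repos"},
}

# Hypothetical scopes granted to each MCP server the assistant is connected to.
MCP_SERVER_SCOPES = {
    "hiring-server": {"hiring:read_candidates"},
    "hr-server": {"hr:read_salary"},
    "finance-server": {"finance:read_budgets"},
    "git-server": {"code:read_repos"},
}

def aggregate_scope(server_scopes: dict[str, set[str]]) -> set[str]:
    """Union of every scope reachable through the assistant's MCP connections."""
    combined: set[str] = set()
    for scopes in server_scopes.values():
        combined |= scopes
    return combined

def roles_exceeded(agent_scope: set[str], roles: dict[str, set[str]]) -> list[str]:
    """Roles whose permissions are a strict subset of what the agent holds."""
    return sorted(name for name, perms in roles.items() if perms < agent_scope)

agent = aggregate_scope(MCP_SERVER_SCOPES)
print(len(agent), roles_exceeded(agent, HUMAN_ROLES))
```

In this toy model no single role holds more than one of the four scopes, yet the assistant holds all four, so every human role is strictly exceeded.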

Your carefully designed access controls assume humans with specific roles. MCP-enabled AI doesn't fit these assumptions, creating security gaps you might not even see.

Identity Masquerading and Confusion

The second disruption involves what I call "identity masquerading." MCP-enabled AI often operates under service accounts or through delegated permissions that blur responsibility.

MCP needs a clear identity model. We need to know who is making each call and which of the MCP server's tools that caller is allowed to use.

But most organizations lack this clarity. When an AI takes action through MCP, current security monitoring can't clearly attribute that action to a specific person. Was it the AI making a decision? The user who started the conversation? The developer who set up the system? This creates serious compliance and audit problems.
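One way to reduce that ambiguity is to record the full delegation chain on every tool call instead of a single principal. The record below is a hypothetical schema, not part of the MCP specification, and its field names are illustrative.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

@dataclass(frozen=True)
class ToolCallAttribution:
    """Hypothetical audit record: who asked, which agent acted, with whose credentials."""
    initiating_user: str   # human who started the conversation
    agent_id: str          # AI application that chose to call the tool
    agent_owner: str       # team or person accountable for the agent's configuration
    mcp_server: str        # server that executed the call
    credential_used: str   # identity the server presented to the downstream system
    tool: str
    timestamp: str

    def acted_as_user(self) -> bool:
        # True only when the downstream call carried the user's own identity.
        return self.credential_used == self.initiating_user

record = ToolCallAttribution(
    initiating_user="alice@example.com",
    agent_id="hr-onboarding-assistant",
    agent_owner="people-platform-team",
    mcp_server="hr-server",
    credential_used="svc-hr-integration",
    tool="read_salary",
    timestamp=datetime.now(timezone.utc).isoformat(),
)
print(asdict(record)["credential_used"], record.acted_as_user())
```

The record makes explicit what a single-principal log would hide: the user asked, an agent owned by a specific team decided, and the HR system only ever saw a service account.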

Zero Trust Collision

Zero Trust architecture is the gold standard for modern security. Its core idea – never trust, always verify – should protect against MCP risks. But the reality is more complex.

How MCP Both Enables and Undermines Zero Trust

MCP actually supports Zero Trust principles by creating standard access points with verification opportunities. When implemented properly, MCP can define strict boundaries with authentication for every interaction.

But here's the paradox: MCP also undermines Zero Trust by blurring the line between user and AI agent identity.

Traditional Zero Trust assumes:

- Clear, verifiable identity for each request

- Context-specific access with minimal privileges

- Continuous verification of authentication

MCP challenges the first assumption by creating mixed identities. An MCP-enabled AI might make requests that combine the user's identity with service account permissions and third-party API access.
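The difference between a mixed identity and a bounded one can be sketched in a few lines. In this hypothetical example, an MCP server holds a broad service-account scope. If it executes requests with that credential alone, the user effectively inherits the service account's reach; intersecting the two keeps each request within what the user could do directly. The scope names are invented for illustration.

```python
# Hypothetical scopes.
SERVICE_ACCOUNT = {"crm:read", "crm:write", "finance:read", "finance:write"}
USER = {"crm:read", "finance:read"}

def effective_scope_mixed(user: set[str], service: set[str]) -> set[str]:
    # Server acts with its stored credential; the user's scope is deliberately ignored.
    return set(service)

def effective_scope_bounded(user: set[str], service: set[str]) -> set[str]:
    # Allowed only where both the user and the server's credential permit it.
    return user & service

print(sorted(effective_scope_mixed(USER, SERVICE_ACCOUNT) - USER))
print(sorted(effective_scope_bounded(USER, SERVICE_ACCOUNT)))
```

The bounded variant is a design choice, not something the protocol enforces on its own; the server has to learn the user's scope somehow, for example from a user-specific token passed through the client.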

The Challenge of Continuous Verification

Zero Trust requires constant verification, but MCP creates new challenges by adding a layer between the requesting entity (AI) and the systems being accessed.

Consider a typical MCP workflow where an AI assistant processes a user request:

- The user asks a question requiring data from multiple systems

- The AI decides which systems to query through MCP

- MCP servers run these queries using stored credentials

- The AI combines the results to generate a response

When should verification happen? At the user's initial request? The AI's decision to access specific systems? When the MCP server runs each query?

Current Zero Trust implementations struggle with this nested verification chain.
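One possible answer is "at every hop." The sketch below checks each stage of the workflow above independently and denies the request if any check fails. The three checks, the boolean token flag, and the policy tables are hypothetical stand-ins for whatever identity provider and policy engine an organization actually uses.

```python
from dataclasses import dataclass

@dataclass
class Request:
    user: str
    token_valid: bool  # stand-in for real token validation (signature, expiry)
    tool: str
    server: str

# Hypothetical policy tables.
USER_TOOL_POLICY = {"alice": {"get_expenses", "get_headcount_plan"}}
SERVER_TOOLS = {"finance-server": {"get_expenses"}, "hr-server": {"get_headcount_plan"}}

def verify_chain(req: Request) -> tuple[bool, str]:
    """Verify each hop separately; return (allowed, failing hop or 'ok')."""
    # Hop 1: the user's initial request.
    if not req.token_valid:
        return False, "user_authentication"
    # Hop 2: the AI's decision to call this tool on the user's behalf.
    if req.tool not in USER_TOOL_POLICY.get(req.user, set()):
        return False, "agent_tool_selection"
    # Hop 3: the MCP server executing the call must be authorized to run that tool.
    if req.tool not in SERVER_TOOLS.get(req.server, set()):
        return False, "server_execution"
    return True, "ok"

print(verify_chain(Request("alice", True, "get_expenses", "finance-server")))
print(verify_chain(Request("alice", True, "get_expenses", "hr-server")))
```

Returning the failing hop, rather than a bare denial, also gives auditors a record of where in the nested chain a request stopped.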

The Unbounded Context Problem

Perhaps the biggest security shift MCP creates is what I call the "unbounded context problem" – AI can access data across previously isolated contexts at the same time.

Context Awareness That Exceeds Human Capabilities

When a human employee accesses information, they work within a single context at a time. This creates natural boundaries. The finance employee doesn't see HR data. The HR employee doesn't see support tickets.

MCP-enabled AI breaks these natural boundaries. An AI agent can build context from financial data, HR records, support tickets, and engineering plans – all within a single session.

This ability creates value by helping the AI make connections across previously separated areas. But it also creates unprecedented security risks.

When AI assistants gain access to sensitive files, databases, or services via MCP, organizations must ensure those interactions are secure, authenticated, and tracked.

Your security model assumes information will stay in its own lane. MCP-enabled AI brings it all together, creating combinations you never planned for.

Unintended Information Exposure

The unbounded context problem leads to accidental information exposure when AI systems combine data in ways security architects never expected.

For example, an AI answering a simple question about "department productivity" might combine:

- Sales figures from the CRM

- Employee performance data from HR

- Project timelines from engineering

- Customer satisfaction metrics from support

Each of these sources may be safe to expose on its own. Together, however, they might reveal confidential business plans or individual performance issues not meant for everyone to see.

This isn't malicious. The AI is just doing what it was designed to do – creating connections across available information. But when MCP enables access across traditionally separate systems, these connections can breach security boundaries.
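A session-level check can catch some of these combinations before they reach a response. The sketch below tags each tool with a hypothetical data category and flags any session whose accumulated categories include a pair the organization has marked as sensitive together. The categories and the rule set are assumptions for illustration.

```python
from itertools import combinations

# Hypothetical mapping from MCP tool to the data category it returns.
TOOL_CATEGORY = {
    "crm_sales": "sales",
    "hr_performance": "employee_performance",
    "eng_timelines": "project_plans",
    "support_csat": "customer_satisfaction",
}

# Hypothetical pairs that are acceptable alone but sensitive together.
SENSITIVE_PAIRS = {
    frozenset({"employee_performance", "sales"}),
    frozenset({"employee_performance", "project_plans"}),
}

def flag_combinations(tools_called: list[str]) -> list[frozenset[str]]:
    """Return every sensitive category pair present in one session."""
    categories = {TOOL_CATEGORY[t] for t in tools_called if t in TOOL_CATEGORY}
    found = []
    for a, b in combinations(sorted(categories), 2):
        pair = frozenset({a, b})
        if pair in SENSITIVE_PAIRS:
            found.append(pair)
    return found

session = ["crm_sales", "hr_performance", "eng_timelines", "support_csat"]
print([sorted(p) for p in flag_combinations(session)])
```

Pairwise rules are the simplest option; real deployments might need rules over larger sets, but the principle of evaluating the session rather than each call stays the same.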

Invisible Threat Surface

MCP creates a new type of threat that traditional security monitoring tools can't detect – the invisible connections between previously isolated systems.

The Pathway Problem in Security Monitoring

Security teams watch network traffic, API calls, and database access. But MCP creates logical pathways between systems that don't show up in these traditional monitoring tools.

When an AI assistant uses MCP to access multiple systems for a single task, the connections between those systems exist only in the AI's context – not in any network logs your security team might check.

For instance, an AI helping with budget planning might:

- Pull current expenses from the finance system

- Review team growth plans from HR

- Access project roadmaps from engineering

- Check industry trends from market research databases

Each individual access might look fine in system logs. But the combination – the pathway between these systems that exists only in the AI's context – represents a potential security risk that's invisible to current monitoring tools.
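If each MCP server wrote a shared correlation identifier into its own logs, the pathway could be reconstructed after the fact. This sketch assumes the client propagates such a session ID to every server; MCP does not define a cross-server identifier for this, and the log entries below are invented.

```python
from collections import defaultdict

# Invented per-system log entries: (timestamp, system, session_id, action).
LOGS = [
    ("09:00:01", "finance", "s-42", "read expenses"),
    ("09:00:02", "hr", "s-42", "read growth plan"),
    ("09:00:02", "finance", "s-77", "read expenses"),
    ("09:00:03", "engineering", "s-42", "read roadmap"),
    ("09:00:04", "market-research", "s-42", "read trends"),
]

def pathways(entries):
    """Group entries by session and return the ordered list of systems each touched."""
    by_session = defaultdict(list)
    for _ts, system, session, _action in sorted(entries):
        by_session[session].append(system)
    return dict(by_session)

def wide_sessions(paths, threshold=3):
    """Sessions that crossed at least `threshold` distinct systems."""
    return sorted(s for s, systems in paths.items() if len(set(systems)) >= threshold)

paths = pathways(LOGS)
print(paths["s-42"], wide_sessions(paths))
```

Each entry on its own is routine; only the grouped view shows that session `s-42` moved across four systems.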

Audit Trails and Chain-of-Thought Challenges

Modern security requires clear audit trails. But MCP introduces what I call the "chain-of-thought" challenge – understanding the reasoning that led an AI to access specific systems in a particular order.

MCP also lacks good monitoring mechanisms: the protocol does not define a standard audit trail for tool calls. Without robust tracking frameworks and consistent logging across servers, spotting unusual patterns, preventing system failures, and responding to potential security incidents becomes difficult.

This creates a basic governance challenge: how do you audit what you can't see?

Governance Without Precedent

All these challenges create a governance problem with no historical comparison. How do you govern a system that crosses identity boundaries, works across contexts, and creates invisible connections between isolated systems?

The Lack of Established Frameworks

There are no established frameworks for governing AI system permissions across MCP connections. This is new territory.

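MCP does not yet provide a complete, standardized framework for managing authentication and authorization across different clients and servers. The specification defines an optional OAuth-based authorization flow for HTTP transports, but fine-grained permissions are left to each implementation. Without a unified way to verify identities and control access, it's hard to enforce detailed permissions, especially in environments with multiple tenants.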

Traditional governance approaches fall short because they assume:

- Clear boundaries between systems

- Static permission models

- Human-centered identity frameworks

- Visible connection paths between systems

MCP challenges all of these assumptions.

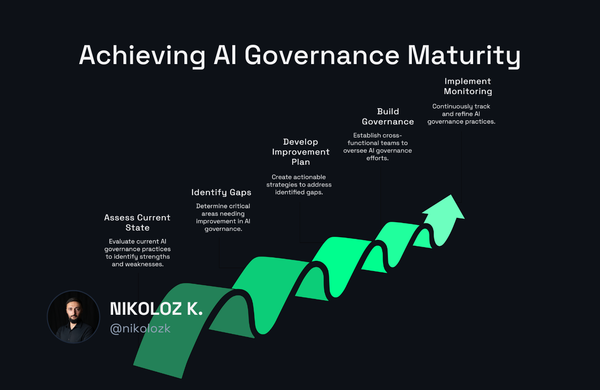

A Strategic Framework for MCP Governance

Based on my analysis, organizations need a new governance framework specifically designed for MCP-enabled AI systems. This framework should include:

- AI-Specific Identity Models: Develop identity frameworks that recognize AI as a distinct actor type with different characteristics than human users.

- Context-Aware Access Controls: Implement permissions that consider the combination of data access rather than just individual system access.

- Cross-System Monitoring: Build monitoring capabilities that track information flow across system boundaries through AI-mediated connections.

- Intent-Based Governance: Shift from action-based permissions to intent-based permissions that consider why an AI is accessing information, not just what it's accessing.

- Human Oversight Triggers: Define clear thresholds for when AI actions require human verification before execution.
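The last item in this list is the easiest to make concrete. The sketch below scores a proposed tool call on a few hypothetical risk factors and pauses for human approval above a threshold. The factors, weights, and threshold are illustrative assumptions that each organization would need to set for itself.

```python
from dataclasses import dataclass

@dataclass
class ProposedAction:
    tool: str
    writes_data: bool        # changes state rather than reading it
    moves_money: bool
    systems_in_session: int  # distinct systems touched so far in this session
    data_sensitivity: int    # 0 = public ... 3 = restricted (hypothetical scale)

APPROVAL_THRESHOLD = 5  # hypothetical; tune per organization

def risk_score(a: ProposedAction) -> int:
    score = a.data_sensitivity
    score += 2 if a.writes_data else 0
    score += 4 if a.moves_money else 0
    score += max(0, a.systems_in_session - 2)  # breadth beyond two systems adds risk
    return score

def requires_human_approval(a: ProposedAction) -> bool:
    return risk_score(a) >= APPROVAL_THRESHOLD

read = ProposedAction("get_expenses", False, False, 2, 1)
pay = ProposedAction("issue_payment", True, True, 3, 2)
print(risk_score(read), requires_human_approval(read))
print(risk_score(pay), requires_human_approval(pay))
```

An additive score is deliberately simple, which keeps thresholds explainable to the people who have to approve or reject the paused actions.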

Your existing security governance wasn't built for AI that can use multiple identities and access patterns at once. Building AI-specific governance isn't optional – it's necessary.

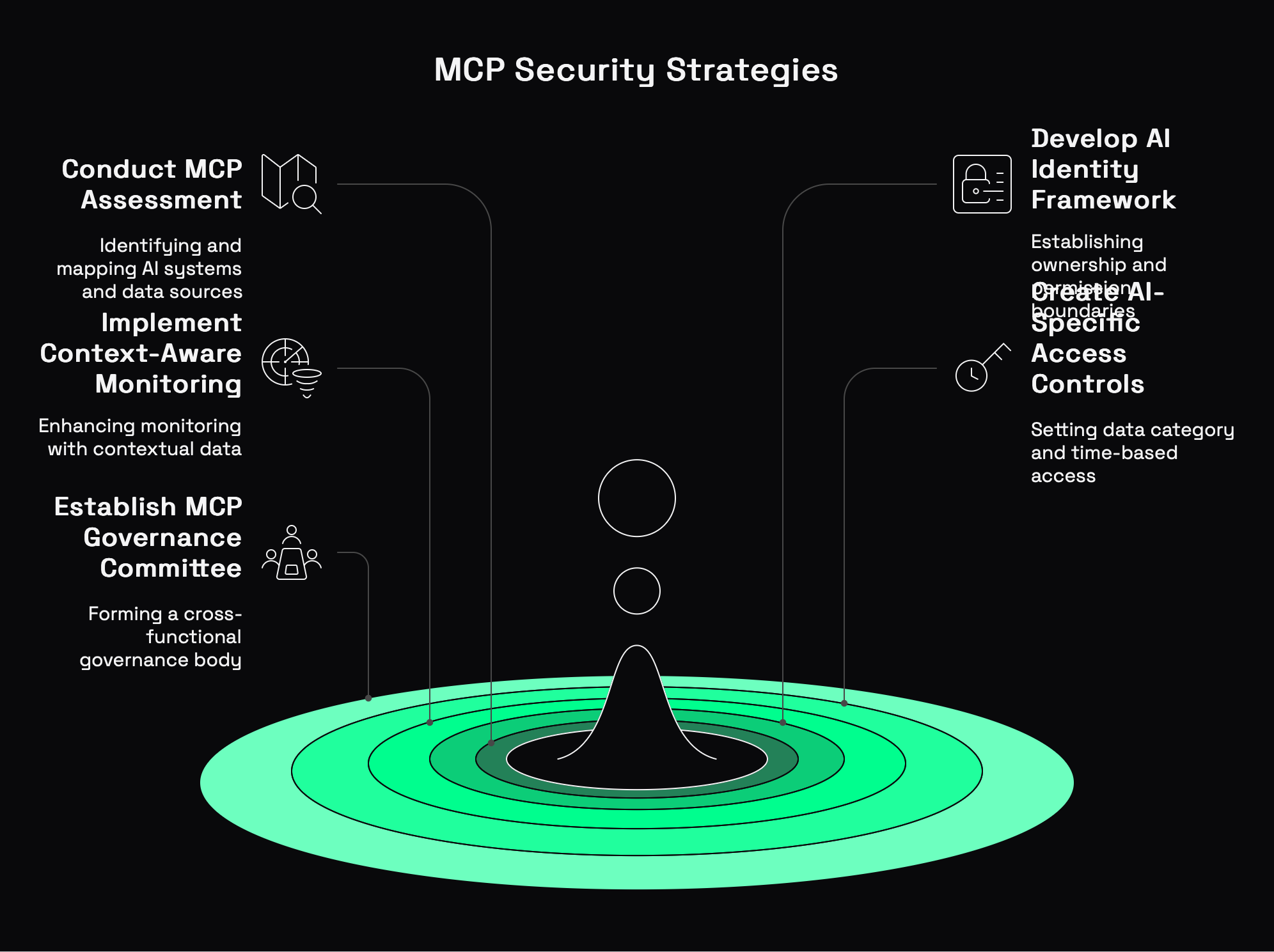

Strategic Recommendations

The security implications of MCP require a strategic response. Here are five actionable recommendations for security leaders:

1. Conduct an MCP Assessment

Begin by identifying all AI systems in your organization that use or plan to use MCP. Map the data sources and systems each AI can access through MCP connections. This visibility is essential before implementing any controls.
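An inventory becomes more useful once it can be queried. The sketch below models it as two hypothetical mappings (AI system to MCP servers, and MCP server to backing data sources) and derives which data sources each AI system can reach and which sources more than one AI system can reach. All system and source names are invented.

```python
# Hypothetical inventory gathered during the assessment.
AI_TO_SERVERS = {
    "support-copilot": ["ticketing-mcp", "crm-mcp"],
    "finance-assistant": ["erp-mcp", "crm-mcp"],
}
SERVER_TO_SOURCES = {
    "ticketing-mcp": ["ticket_db"],
    "crm-mcp": ["crm_db"],
    "erp-mcp": ["general_ledger", "payables"],
}

def reachable_sources(ai_system: str) -> set[str]:
    """All data sources an AI system can touch through its MCP servers."""
    sources: set[str] = set()
    for server in AI_TO_SERVERS.get(ai_system, []):
        sources.update(SERVER_TO_SOURCES.get(server, []))
    return sources

def shared_sources() -> dict[str, set[str]]:
    """Data sources reachable by more than one AI system."""
    reach: dict[str, set[str]] = {}
    for ai in AI_TO_SERVERS:
        for src in reachable_sources(ai):
            reach.setdefault(src, set()).add(ai)
    return {src: ais for src, ais in reach.items() if len(ais) > 1}

print(sorted(reachable_sources("finance-assistant")))
print(shared_sources())
```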

2. Develop an AI Identity Framework

Create a dedicated identity framework for AI systems that:

- Clearly defines ownership and responsibility for each AI system

- Sets permission boundaries based on business function, not technical capability

- Creates specific review processes for cross-domain access requests
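As a rough sketch of what such a framework might record, the registry below gives each AI system a named owner and a business function, derives allowed tools from that function, and routes tools belonging to another domain to review instead of silently allowing or denying them. All names and functions are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical mapping from business function to the MCP tools it justifies.
FUNCTION_TOOLS = {
    "hr_onboarding": {"read_candidate", "create_employee_record"},
    "finance_planning": {"read_budgets", "read_expenses"},
}

@dataclass(frozen=True)
class AIIdentity:
    agent_id: str
    owner: str              # accountable human or team
    business_function: str

def decide(agent: AIIdentity, tool: str) -> str:
    """Return 'allow', 'review', or 'deny' for a tool request."""
    if not agent.owner:
        return "deny"  # unowned AI systems get no access
    if tool in FUNCTION_TOOLS.get(agent.business_function, set()):
        return "allow"
    if any(tool in tools for tools in FUNCTION_TOOLS.values()):
        return "review"  # tool belongs to another domain: cross-domain request
    return "deny"

bot = AIIdentity("onboarding-assistant", "people-platform-team", "hr_onboarding")
print(decide(bot, "read_candidate"), decide(bot, "read_budgets"), decide(bot, "drop_table"))
```

Tying permissions to the business function rather than to whatever the connected servers expose is what keeps the assistant's reach from growing every time a new server is added.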

3. Implement Context-Aware Monitoring

Traditional security monitoring isn't enough for MCP-enabled systems. Implement context-aware monitoring that:

- Logs the complete context of each AI interaction

- Tracks information flow between previously isolated systems

- Identifies unusual data combination patterns that might indicate security risks

4. Create AI-Specific Access Controls

Your existing access management tools weren't designed for MCP-enabled AI. Develop AI-specific access controls that:

- Set boundaries based on data categories rather than just systems

- Include time-based access limitations for sensitive information

- Require progressive authentication for increasingly sensitive actions
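The three controls above can be combined in a single decision function. In this sketch, grants are keyed by data category rather than by system, each grant expires, and more sensitive categories demand a stronger authentication level for the current session. The category names, levels, and durations are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical minimum authentication level per data category
# (1 = SSO session, 2 = recent MFA, 3 = MFA plus human approval).
REQUIRED_AUTH_LEVEL = {"public": 1, "internal": 1, "salary": 2, "payments": 3}

@dataclass(frozen=True)
class Grant:
    category: str
    expires_at: datetime

def can_access(grants: list[Grant], category: str, auth_level: int, now: datetime) -> bool:
    has_live_grant = any(g.category == category and g.expires_at > now for g in grants)
    # Unknown categories default to the strictest level.
    strong_enough = auth_level >= REQUIRED_AUTH_LEVEL.get(category, 3)
    return has_live_grant and strong_enough

now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
grants = [
    Grant("internal", now + timedelta(hours=8)),
    Grant("salary", now + timedelta(minutes=15)),
]
print(can_access(grants, "salary", 1, now))                          # needs step-up
print(can_access(grants, "salary", 2, now))                          # allowed
print(can_access(grants, "salary", 2, now + timedelta(minutes=30)))  # grant expired
```

Short expiries on sensitive categories limit how long a single approval can be reused across the AI's later actions.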

5. Establish an MCP Governance Committee

MCP governance requires cross-functional expertise. Establish a dedicated governance committee that includes:

- Security leadership

- Data privacy experts

- AI ethics specialists

- Business function representatives

- Legal and compliance experts

This committee should develop and maintain MCP-specific security policies, review access requests, and respond to emerging risks.

The Bottom Line

MCP represents a fundamental shift in how AI systems interact with your organization's data and systems. This shift creates unprecedented security challenges that go beyond traditional models.

The "identity confusion" MCP creates – where AI actions blend human and machine permissions – requires a new security mindset. Organizations that fail to adapt will face increasing risks as MCP adoption accelerates.

But those who embrace this new reality have an opportunity to use MCP's power while establishing appropriate safeguards. By developing AI-specific governance frameworks, implementing context-aware controls, and establishing clear oversight mechanisms, security leaders can enable responsible MCP adoption.

The choice isn't whether to use MCP – that ship has sailed as major AI providers embrace the standard. The choice is whether you'll govern MCP deliberately or allow it to evolve without oversight.

Given the superpowers MCP creates – identity amplification, context consolidation, and permission persistence – deliberate governance isn't optional. It's essential.

Are you ready for the new security reality?