The Perils of Platform Dependence: Lessons from the Great CrowdStrike Meltdown

Nikoloz Kokhreidze

The CrowdStrike Falcon Sensor update on July 19, 2024 caused global system outages, exposing the risks of single-vendor reliance. Learn why a resilient hybrid approach, open architectures and unified management are needed for effective cybersecurity architectures.

It's time we had a frank chat about what just went down in the world. On July 19th, 2024, we witnessed what I'm calling the "Great CrowdStrike Meltdown" - an event that's shaken the foundations of our industry and left many of us questioning our approach to security architecture.

What Happened?

CrowdStrike, a name we've all come to trust in the endpoint protection game, pushed out an update that turned into a nightmare. Their Falcon Sensor, typically a robust defender against threats, became the very thing it was designed to protect against.

The result? A tsunami of Blue Screens of Death (BSODs) swept across Windows machines globally. We're talking about entire fleets of workstations and servers going down.

The impact was staggering:

- In Australia, one team reported a fleet of 50,000+ machines affected

- Malaysia saw 70% of laptops down and stuck in boot

- New Zealand experienced company-wide outages impacting servers and workstations

- Major US airlines grounded flights, airports in Europe faced disruptions, and even Sky News in the UK couldn't broadcast

Talk about a bad day at the office!

A History of Hiccups

Now, before we get too caught up in the CrowdStrike drama, let's remember this isn't the first rodeo for security software snafus:

- April 2010: McAfee bricked enterprise PCs worldwide

- December 2010: AVG caused Windows 7 to crash

- July 2012: Symantec's signature update crashed Windows XP

- And let's not forget Microsoft's own BSOD-inducing updates

The reason everyone is talking about the CrowdStrike meltdown now is the sheer size of CrowdStrike's customer base.

Technical Details

From what we know so far, the problematic Falcon Sensor update included invalidly formatted channel update files C-00000291*.sys, which caused the top-level CrowdStrike driver to crash, resulting in a flood of BSODs. You can find more in-depth analysis in CrowdStrike’s blog post.

It's still unclear how or why CrowdStrike delivered these faulty files (on a Friday, mind you), but one thing is certain: the impact is going to be massive. In fact, I believe this incident will go down in history as the biggest 'cyber' event ever in terms of sheer scope and scale.

Why? Because the recovery process is incredibly difficult and time-consuming. To fix the issue, IT teams need to:

- Boot each affected machine into safe mode

- Log in as a local admin

- Manually delete the problematic files

While both CrowdStrike and Microsoft are working on automating the process, it's not easily automatable. We're talking about a painstaking, machine-by-machine slog that could take days or even weeks for large organizations.
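To make the manual deletion step concrete, here is a minimal sketch of what it amounts to once a machine is already in safe mode with admin rights. Only the file pattern comes from the incident details above. The driver directory is an assumption based on the publicly circulated remediation guidance, the function name is mine, and Python is often not available in a recovery environment, so treat this as an illustration of the logic rather than a recovery tool.

```python
from pathlib import Path

# Assumption: default Falcon driver directory from public remediation guidance.
# It is not stated in this article, so verify it on your own systems.
DEFAULT_DRIVER_DIR = Path(r"C:\Windows\System32\drivers\CrowdStrike")

# File pattern named in the incident details.
CHANNEL_FILE_PATTERN = "C-00000291*.sys"


def remove_faulty_channel_files(
    driver_dir: Path = DEFAULT_DRIVER_DIR, dry_run: bool = True
) -> list[Path]:
    """Return the matching channel files, deleting them unless dry_run is set."""
    if not driver_dir.is_dir():
        return []
    matches = sorted(driver_dir.glob(CHANNEL_FILE_PATTERN))
    if not dry_run:
        for path in matches:
            path.unlink()
    return matches


if __name__ == "__main__":
    # Dry run by default: list what would be removed without touching anything.
    for found in remove_faulty_channel_files():
        print(f"would delete: {found}")
```

The deletion itself is trivial. The hard part is everything around it: getting each machine into safe mode, having local admin credentials, and having disk encryption recovery keys to hand.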

For a more detailed explanation of the outage, including insights into kernel-mode and user-mode interactions and how boot drivers (such as the CrowdStrike Falcon sensor) can cause BSODs, refer to this YouTube video from a former Windows developer.

The Ripple Effect: Beyond Just Downtime

And that's just the technical side of things. The business impact is going to be even more staggering:

- Unprecedented Downtime: With entire fleets of machines out of commission, many organizations are facing near-total work stoppages. The productivity losses are going to be astronomical.

- Customer and Partner Fallout: For companies that rely on their IT systems to serve customers or collaborate with partners, this outage is nothing short of catastrophic. Expect to see a wave of broken SLAs, angry customers, and strained business relationships.

- Compliance and Regulatory Risks: For organizations in heavily regulated industries like healthcare and finance, extended downtime can put them at risk of falling out of compliance with various regulations. This could lead to hefty fines and even legal action.

- Healthcare at Risk: One US healthcare IT worker reported 400,000 endpoints and servers out of commission. In an environment where human lives depend on technology, this incident can easily become catastrophic.

- Financial Impact: CrowdStrike's stock took a nosedive on July 19, dropping over 12% (and as much as 20% at one point). We will have to see how many other market leaders will be impacted.

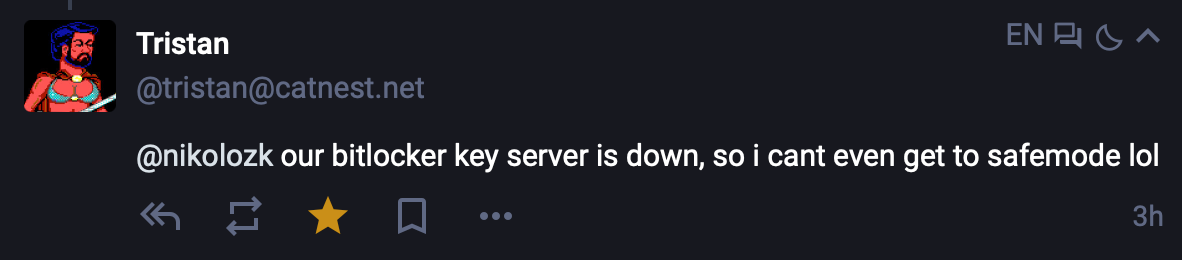

- Access Nightmares: Some organizations lost access to their BitLocker key servers, leaving them unable to even boot into safe mode.

Make no mistake: CrowdStrike is going to be in very hot water over this. The fallout from this incident is going to be felt for months, if not years, to come.

But as cybersecurity leaders, our job isn't to point fingers - it's to learn from this incident and take steps to ensure that something like this never happens again. And that starts with a hard look at our own reliance on single-vendor solutions.

The Single-Vendor Trap

We, as an industry, have become too reliant on single-vendor solutions. We've been seduced by the promise of integrated platforms, sacrificing resilience for convenience.

I get the appeal and I am guilty of this too. Unified dashboards, seamless integration, simplified management - it all sounds great on paper. But as we've just seen, it creates a single point of failure that can bring entire organizations to their knees.

Cloud Conundrum

By moving to the cloud, we have also placed massive trust in vendors' ability to test and verify their updates. Because of this growing trust, many organizations have scaled back their own testing of patches from security vendors and service providers. Auto-updates were enabled on these solutions, and some solutions do not even give customers the option to test updates before deployment, because the provider deploys to all customers automatically.

This shift in responsibility has led to a dangerous situation where organizations are at the mercy of their vendors. When a vendor pushes out a faulty update, as we saw with CrowdStrike, the consequences can be catastrophic. Organizations are left scrambling to recover, with little control over the situation.
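Where a vendor does give you control over update timing, one way to take some of that control back is a staged (ring-based) rollout. The sketch below is my own illustration, not a feature of any particular product: the ring names, fleet shares and health threshold are all hypothetical. Each host is assigned deterministically to a ring, and an update only moves on to the next ring once enough hosts in the current ring report healthy.

```python
import hashlib

# Hypothetical rings with cumulative shares of the fleet:
# 5% canary, the next 20% early adopters, the remaining 75% broad.
RINGS = [("canary", 0.05), ("early", 0.25), ("broad", 1.0)]


def ring_for_host(hostname: str) -> str:
    """Map a hostname to a rollout ring, stable across runs and machines."""
    digest = hashlib.sha256(hostname.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # value in [0, 1)
    for name, upper_bound in RINGS:
        if bucket < upper_bound:
            return name
    return RINGS[-1][0]


def may_promote(healthy: int, total: int, min_healthy_ratio: float = 0.99) -> bool:
    """Allow the next ring only if enough hosts in the current ring are healthy."""
    if total == 0:
        return False  # no evidence yet, so do not promote
    return healthy / total >= min_healthy_ratio
```

With something like this in place, a faulty update would stall at the canary ring instead of reaching the whole fleet at once. It only helps, of course, if the vendor lets you gate the update in the first place.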

Honestly, it's not about pointing fingers or assigning blame. It's about recognizing that in the complex world of cybersecurity, mistakes are inevitable. CrowdStrike will make mistakes, AWS will make mistakes, your teams will make mistakes. What matters is how we, as customers, are empowered to deal with the consequences.

The Dangerous Dichotomy: Best-of-Breed vs. Platform

For years, we've been told that we have to choose between the flexibility of best-of-breed solutions and the simplicity of integrated platforms. Vendors have pushed the narrative that platforms are the future, and that best-of-breed architectures are too complex and fragmented to be effective.

The CrowdStrike incident has exposed the fallacy of this dichotomy. We've seen firsthand that relying on a single platform, no matter how comprehensive, creates a Single Point of Failure (SPOF). When that platform fails, it takes everything down with it.

But the answer isn't to swing to the other extreme and wire together a patchwork of point solutions. That approach comes with its own challenges of integration, management, costs and potential security gaps.

Embracing a Hybrid Approach

I believe the path forward lies in embracing a hybrid approach that takes the best of both worlds. And I am fully aware that this is way easier said than done!

We need to create security architectures that are modular, flexible, and resilient, while still providing the integration and ease of management that platforms promise.

Here's what that could look like in practice:

- Defining Core Security Functions: Identify the core security functions that are absolutely critical to your organization (e.g., endpoint protection, network monitoring, threat intelligence).

- Selecting Best-of-Breed Tools: For each of these core functions, select the best-of-breed tool that meets your specific needs. Focus on tools with open architectures, robust APIs, and a track record of reliability.

- Building a Unified Management Layer: Implement a unified management layer that sits above these best-of-breed tools. This could be an in-house developed solution or a carefully selected vendor platform that prioritizes openness and interoperability.

- Ensuring Redundancy and Failover: For each core security function, ensure there is redundancy and failover. This might mean having multiple best-of-breed tools for the same function, or building in failover to a secondary platform.

- Continuously Testing and Adapting: Regularly test your hybrid architecture against various failure scenarios. Test new updates as they come before deployment. Be prepared to swap out tools or adjust your approach as new threats and technologies emerge.
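To give a feel for the unified management layer and failover ideas above, here is a minimal sketch. Everything in it is hypothetical: the `EndpointTool` interface, the `StubTool` stand-in and the `isolate_host` action are names I made up for illustration, and a real integration would wrap each vendor's actual API behind the same interface.

```python
from dataclasses import dataclass
from typing import Protocol


class EndpointTool(Protocol):
    """Hypothetical common interface that every wrapped tool must satisfy."""

    name: str

    def is_healthy(self) -> bool: ...

    def isolate_host(self, hostname: str) -> str: ...


@dataclass
class StubTool:
    """Stand-in for a real vendor adapter, used here only for demonstration."""

    name: str
    healthy: bool = True

    def is_healthy(self) -> bool:
        return self.healthy

    def isolate_host(self, hostname: str) -> str:
        return f"{self.name} isolated {hostname}"


class ManagementLayer:
    """Routes an action to the first healthy tool, in order of preference."""

    def __init__(self, tools: list[EndpointTool]) -> None:
        self.tools = tools

    def isolate_host(self, hostname: str) -> str:
        for tool in self.tools:
            if tool.is_healthy():
                return tool.isolate_host(hostname)
        raise RuntimeError("no healthy endpoint tool available")


if __name__ == "__main__":
    layer = ManagementLayer([StubTool("primary", healthy=False), StubTool("secondary")])
    print(layer.isolate_host("laptop-42"))  # handled by the secondary tool
```

The point of the design is that the rest of your tooling talks to the management layer, not to a vendor. Swapping or adding a tool then means writing one new adapter rather than rewiring everything.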

The Need for Open Architectures and Customization

The decision to move forward with platform solutions and trust a single vendor depends on each organization's unique risk appetite. However, the current vendor landscape doesn't make it easy for organizations to tailor their defenses.

To address this, vendors must provide open architectures and easy cross-platform integration, allowing security teams to:

- Manage various cybersecurity solutions centrally through a custom-built or vendor-provided command center

- Use the same query language for any EDR, reducing the need to train teams on multiple query languages (albeit via a translation layer)

- Deploy similar policies and detection mechanisms on different solutions
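The translation layer mentioned above could, in its simplest form, look like the sketch below. A query is written once in a vendor-neutral form and rendered per backend. Both output syntaxes are invented for illustration and do not correspond to any real EDR query language. The sketch also skips value escaping, which a real implementation would need.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Condition:
    """One vendor-neutral equality filter, e.g. process_name == 'cmd.exe'."""

    field: str
    value: str


def render_backend_a(conditions: list[Condition]) -> str:
    # Hypothetical syntax: key="value" pairs joined with AND.
    return " AND ".join(f'{c.field}="{c.value}"' for c in conditions)


def render_backend_b(conditions: list[Condition]) -> str:
    # Hypothetical syntax: a pipeline of "where" filters over an events table.
    return "events" + "".join(f" | where {c.field} == '{c.value}'" for c in conditions)


if __name__ == "__main__":
    query = [Condition("process_name", "cmd.exe"), Condition("user", "admin")]
    print(render_backend_a(query))
    print(render_backend_b(query))
```

Analysts learn one way of expressing a hunt, and the per-vendor renderers absorb the differences. That only works well if vendors document their query languages and data models openly, which is exactly the ask here.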

The truth is that cybersecurity solutions are often closed platforms: walled gardens where each new implementation of a "next-gen", "breach preventing" product locks you in a little further with a single vendor.

We need to demand open architectures and cross-platform integrations. Vendors must open up their solutions, and security teams should be able to integrate, manage and swap their solutions as needed.

The future of cybersecurity lies in engineering, and building is the cornerstone of it. Modern security teams have a business need to customize their security stack to address their use cases. Vendors who adapt to this growing need and are not afraid of customers diversifying will conquer the market, while those with a decade-old mindset of locking customers in will appeal to old-school CISOs who have not built anything except document stacks.

Collaborate and Innovate

Building a truly resilient and effective hybrid security architecture will require collaboration and innovation across our industry. We need to come together to:

- Define Open Standards: Work towards defining open standards for security tool interoperability and data exchange.

- Share Best Practices: Openly share our experiences, successes, and failures in implementing hybrid architectures.

- Work with Vendors for Change: Collectively work with vendors to adopt open architectures, provide better transparency, and support hybrid approaches.

- Foster Innovation: Encourage and invest in innovative solutions that bridge the gap between best-of-breed and platform approaches.

Final Thoughts

The debate between best-of-breed and platform solutions will continue, but by embracing a hybrid approach and demanding open architectures from vendors, we can create security architectures that are truly fit for purpose in the modern business landscape.

The Great CrowdStrike Meltdown served as a wake-up call for the industry to rethink its approach to security and its relationships with vendors. As we move forward, we must recognize that there is no one-size-fits-all solution, and each organization has its own unique risk appetite, budget and security needs.

Embracing a hybrid approach and demanding open, customizable solutions from vendors will enable the creation of security ecosystems tailored to these unique needs. This journey will require a significant shift in mindset, investment, and a willingness to challenge the status quo.

I also think that as cybersecurity leaders, we must come together as a community, share our experiences and best practices, and collectively push for a new paradigm in cybersecurity that prioritizes resilience, adaptability, and customer empowerment.

The future of the industry is in our hands, and we must seize this opportunity to learn from the past, challenge the present, and build a more secure, more resilient future for all.